AI is transforming the ways data is summarised, explored and analysed, acting as a powerful assistant to speed up generic work processes. The promises of productivity gains and a shift to higher-order work are real. However, as impact measurement increasingly integrates these tools, a crucial distinction is often lost. During a recent AVPN session on AI’s applications in our field, a key insight crystallised for me: AI’s strengths remain confined to processing large data sets, while the fundamental research tasks of defining the what, who, and how lie firmly outside its core capabilities. Let me explain:

The ‘What’

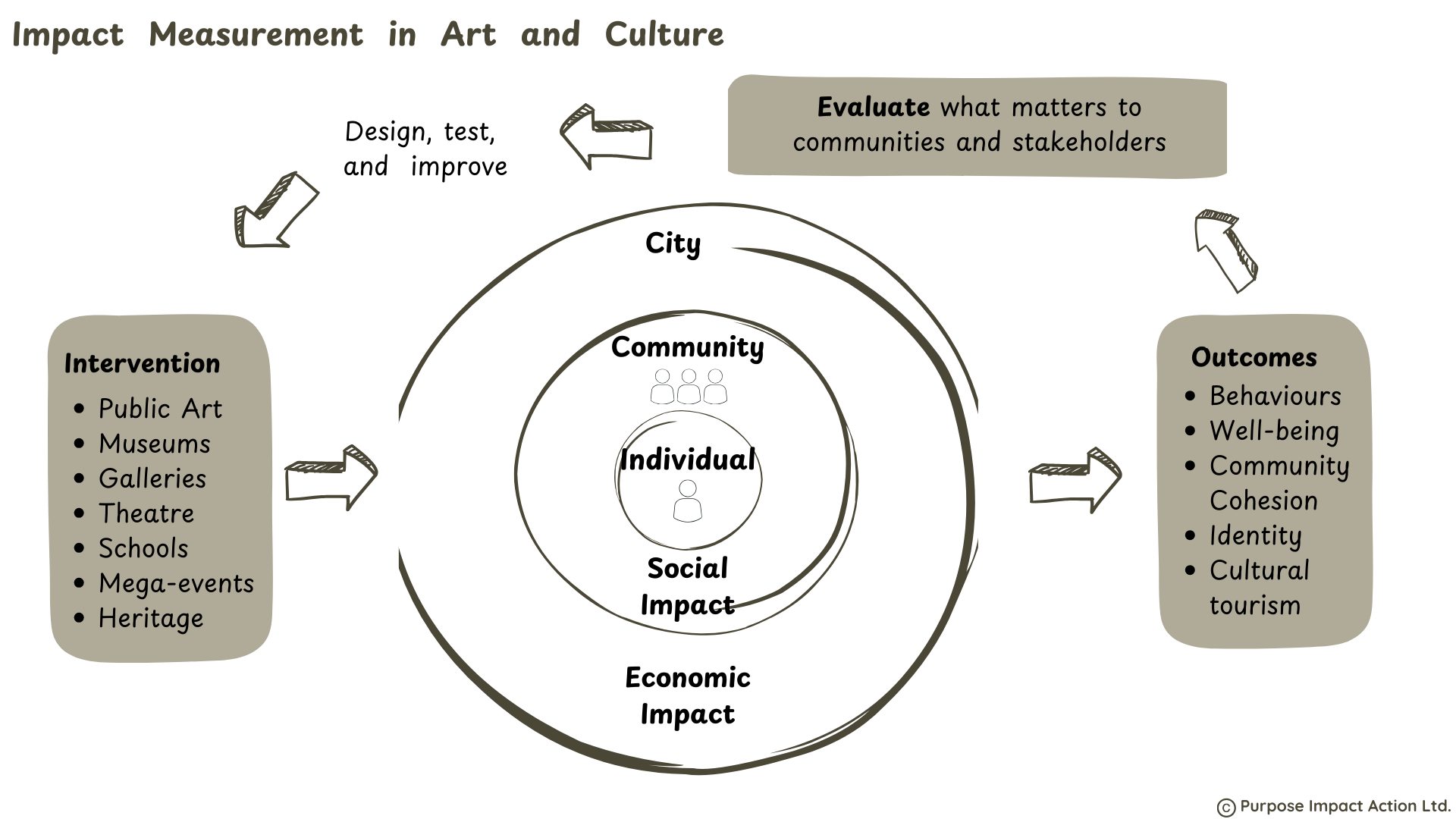

The ‘What’ research questions are the cornerstone of impact measurement. They define the specific impacts being investigated, set the study’s scope, and provide the essential framework for methodology design, data collection, and analysis. Without them, impact measurement is unfocused, leading to reporting irrelevant findings and wasted resources.

However, identifying the ‘what’ requires a deep understanding of context and stakeholder values, which can only be achieved through genuine human engagement. This means facilitating rich discussions – via interviews and focus groups – with both funders and operational staff on the one side, and grantee organisations and their programme delivery staff on the other. These conversations are essential to tease out the varying perspectives on the content of programme activities, their delivery (timing, dosage), and the appropriate target groups to generate the Theory of Change that will be tested.

For example: Consider a school literacy programme. An AI could surface a standard Theory of Change focused solely on improving test scores. However, only through dialogue with teachers and community leaders would researchers discover that a core community priority is not just academic performance, but also fostering a joy of reading and student confidence. A funder’s initial ‘What’ might be “Did the programme improve test scores by 10%?” While important, human engagement refines this into a more meaningful set of questions: “To what extent did the programme improve reading fluency and increase students’ self-reported confidence and enjoyment of reading?” This nuanced ‘What’, born from dialogue, ensures the measurement captures the programme’s true, multifaceted impact.

While AI can assist by surfacing existing theories, it cannot replace the human stakeholders who must ultimately validate the soundness of the research questions. In short, the ‘What’ is born from dialogue and shared purpose, not data patterns.

The ‘Who’

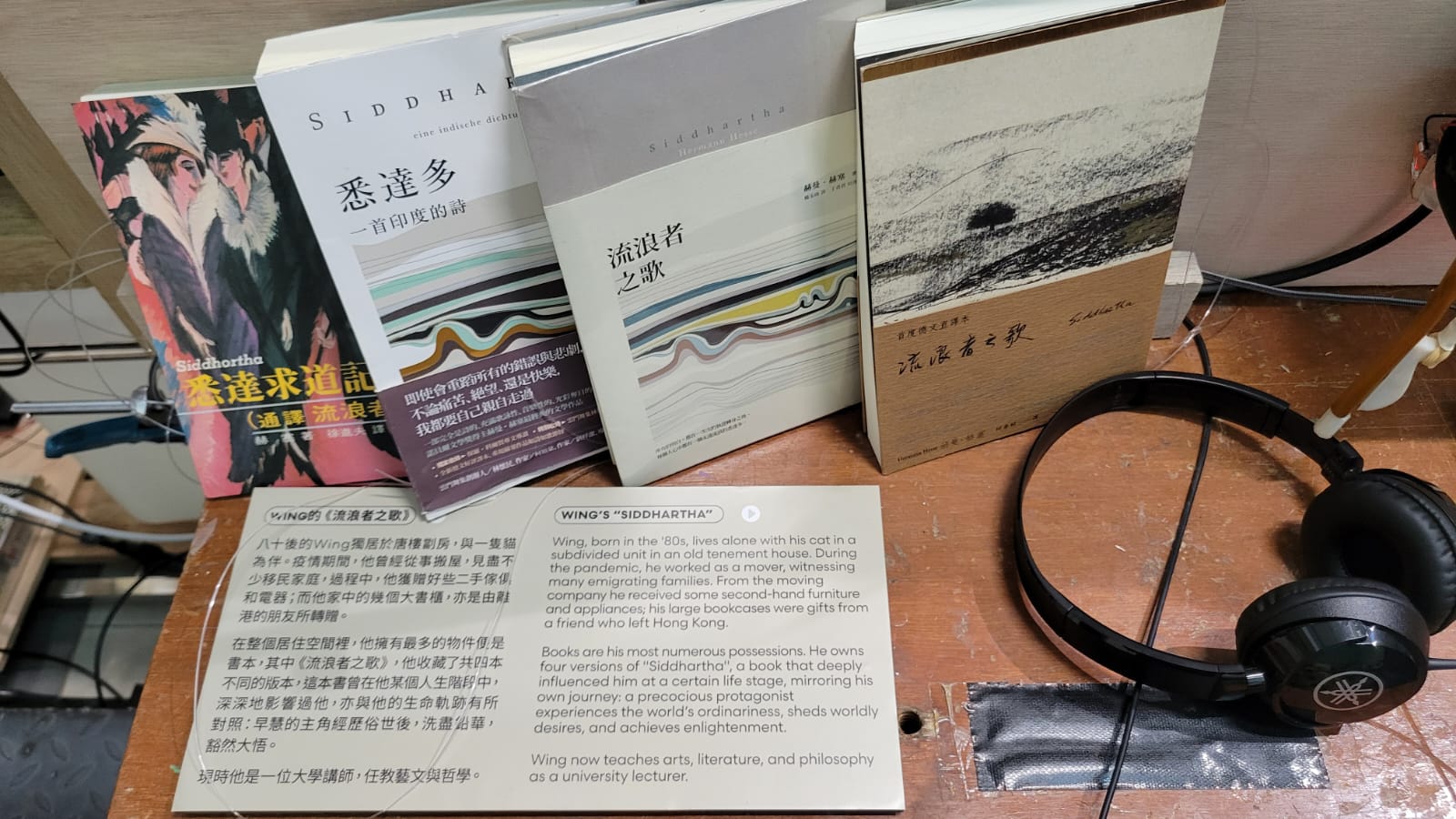

AI can segment and analyse demographic data, but it cannot grasp the human realities behind the data. As such, it remains blind to the cultural nuances, power dynamics and intergenerational perspectives that define and differentiate stakeholder groups. Acquiring this understanding is fundamental to developing a balanced and unbiased impact framework. This ensures that the measurement itself does not perpetuate existing inequalities by, for instance, overlooking marginalised voices or misrepresenting community priorities.

AI can identify who the stakeholders are on a spreadsheet, but only humans can understand who they are in the context of the intervention and ensure their values are authentically represented.

For example: An AI analysing school data can quickly identify “parents from low-income households” as a key stakeholder group. However, only through human engagement can researchers understand that many of these parents work multiple jobs with inflexible hours, often in shifts that conflict with standard school events. An impact survey distributed only via email, or interviews scheduled during the school day, based on the AI’s data analysis, would systematically exclude them. A human researcher, understanding these logistical constraints, would ensure participation by offering surveys in multiple formats (e.g., paper, short mobile-friendly versions) and interviews or focus groups at more accessible times (including early mornings or weekends). This approach authentically captures this group’s voice, which would otherwise be lost.

The ‘How’

AI can suggest methodologies, but it cannot exercise judgment in the field. The ‘How’ of impact measurement methodology – adapting to unforeseen challenges, practising cultural sensitivity, and ensuring “Do No Harm” principles – requires human empathy and adaptability.

Selecting the right research tools is a strategic exercise in alignment and efficiency. It requires triangulating three key elements: the research questions, the nature of the stakeholder groups, and existing secondary evidence. The goal is to design an approach that gathers robust data without overburdening participants. While AI can be prompted to generate a survey based on keywords, it cannot ensure this critical alignment. It remains limited in its ability to coherently connect data collection tools – like surveys, focus group protocols, or interview guides – back to the strategic pillars of the project, such as the Theory of Change or logic model. A tool might be technically sound but strategically misaligned, collecting data that is interesting but ultimately irrelevant to the core impact questions.

When the core of our work relies on this deeply human understanding and strategic alignment, we must ask ourselves:

Is faster necessarily better?

By Marianna Lemus Boskovitch, Senior Consultant, Purpose Impact Action
